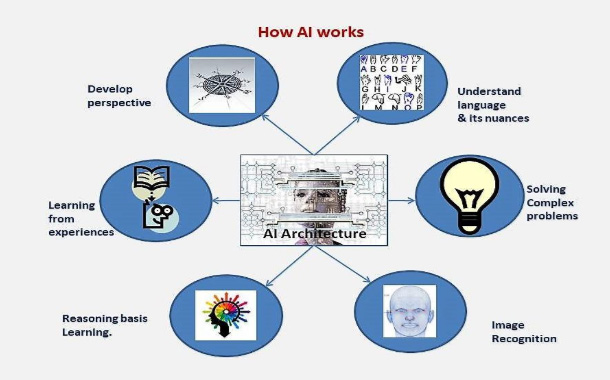

Oxford dictionary definition: The theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.

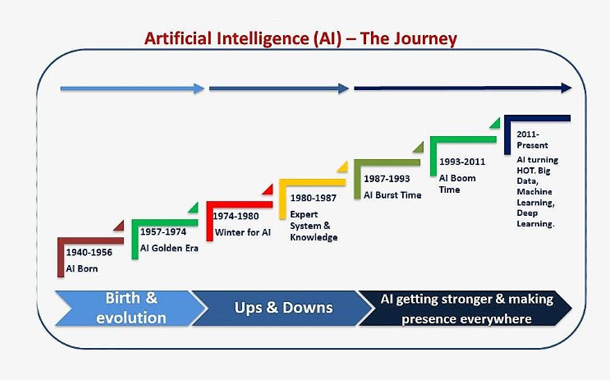

Artificial Intelligence (AI) has recently become a buzzword across the length and breadth of day-to-day life as well as the business world. But not many know that AI has a long history of evolution, with its own ups and downs before it reached this pinnacle. In this piece, we will touch on the roller-coaster ride AI went through to get where it is today.

The birth of Artificial Intelligence (AI), according to Wikipedia, is said to lie in the 1940s and 50s, when a group of brilliant scientists from fields such as mathematics, psychology, engineering, economics and political science began discussing whether an artificial brain could be built. Strictly speaking, though, it was in 1956 that artificial intelligence research was formally accepted as an academic discipline.

Golden Era of AI: This period, which ran from 1956 to 1974, is called the Golden Era. The years after the Dartmouth conference of 1956 were a time of discovery and of exploration across this nascent field. The computer programs developed during this time were, to most people of that period, simply “astonishing”: computers were solving algebra word problems, proving theorems in geometry and learning to speak English. Few at the time would have believed that such “intelligent” behavior by machines was possible at all. Researchers expressed intense optimism in private and in print, predicting that a fully intelligent machine would be built within 20 years. Government agencies like DARPA started funding research in this new field.

AI Winter (1974-80): The period from 1974 to 1980 is called the AI Winter: the very high expectations set for AI were not met, for many reasons. The likely reasons were:

- Lack of high computing power required for AI.

- Intractability: many problems demanded a huge amount of processing and data.

- Commonsense knowledge and reasoning, which again require a great deal of data for learning, at a time when computer memory and storage were scarce and very expensive.

- Because of these technological bottlenecks, a supposedly simple task like recognizing a face or crossing a room without bumping into anything was extremely difficult. This helps explain why research into vision and robotics had made so little progress by the mid-1970s.

During this period, development in AI fell to its lowest level, as funding stopped and the many hurdles slowed progress.

Expert and knowledge systems (1980-87): In the 1980s a form of AI program called “expert systems” was adopted by corporations around the world, and knowledge systems became the focus of mainstream AI research. In those same years, the Japanese government aggressively funded AI with its fifth generation computer project. Another encouraging event in the early 1980s was the revival of connectionism in the work of John Hopfield and David Rumelhart. Once again, AI had achieved success.

AI bust (1987-93): Then the bubble burst, in a downturn that lasted from 1987 to 1993. The business community’s fascination with AI rose and fell in the 80s in the classic pattern of an economic bubble. The collapse was in the perception of AI by government agencies and investors; the field itself continued to make advances despite the criticism and setbacks. Rodney Brooks and Hans Moravec, two iconic researchers from the related field of robotics, argued for an entirely new approach to artificial intelligence.

AI boom (1993-2011): Here began a boom that never looked back. Now more than half a century old, AI finally achieved some of its oldest goals. It began to be used successfully throughout the technology industry, although somewhat behind the scenes. Some of the success was due to increasing computer power, and some was achieved by focusing on specific isolated problems and pursuing them with the highest standards of scientific accountability. Still, the reputation of AI, in the business world at least, was less than pristine. Inside the field there was little agreement on the reasons for AI’s failure to fulfill the dream of human-level intelligence that had captured the imagination of the world in the 1960s. Together, all these factors helped to fragment AI into competing subfields focused on particular problems or approaches, sometimes even under new names that disguised the tarnished pedigree of “artificial intelligence”. AI was both more cautious and more successful than it had ever been.

AI becomes Machine Learning (ML), Deep Learning and Big Data (2011–present):

The years after 2000 brought unprecedented computing power along with cheaper memory and data storage. In the first decades of the 21st century, easy access to large amounts of data, whether structured or unstructured (known as “big data”), faster computers and advanced machine learning techniques built with advanced programming languages were successfully applied to many problems throughout the economy. In fact, the McKinsey Global Institute estimated in its famous paper “Big data: The next frontier for innovation, competition, and productivity” that “by 2009, nearly all sectors in the US economy had at least an average of 200 terabytes of stored data”. This later held true not only for the US but also for countries such as China, the UK and other European nations. India, too, had its own important share in this journey. China is expected to dominate AI research and applications, especially on mobile devices.

By 2016, the market for AI-related products, hardware and software had reached more than 8 billion dollars, and the New York Times reported that interest in AI had reached a “frenzy”. The applications of big data began to reach into other fields as well, such as training models in ecology and various applications in economics. Advances in deep learning (particularly deep convolutional neural networks and recurrent neural networks) drove progress and research in image and video processing, text analysis, and even speech recognition.

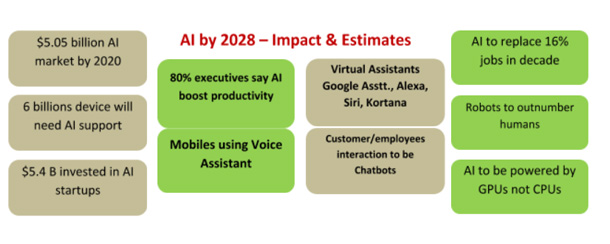

AI Impact: The impact is already huge, and AI will go on to dominate every aspect of life: at home, while travelling, at restaurants, at work, at airports, in flight and almost everywhere else. The few statistics here say it all, if one takes them at face value:

Microsoft co-founder Bill Gates recently called artificial intelligence “the holy grail that anyone in computer science has been thinking about” during Vox Media’s Code Conference.

Artificial Intelligence will influence every part of life, the business world and healthcare like never before. The use cases for AI and machine/deep learning are limited only by our imagination. One negative effect, however, is the displacement of human labor. Many well-known researchers and tech personalities argue that its use should be limited so that it does not become a cause of unemployment. Unemployment is a serious concern, but AI will also generate employment, although the jobs it creates will be very different from the ones it removes. Industry, meanwhile, is watching closely and is excited to use AI across the business life cycle.