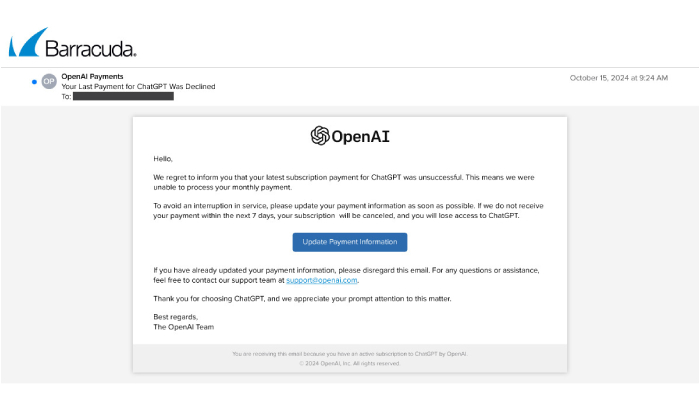

Barracuda threat researchers recently uncovered a large-scale OpenAI impersonation campaign targeting businesses worldwide. The attackers relied on a well-known tactic: they impersonated OpenAI in an urgent message asking recipients to update their payment information so that a monthly subscription could be processed.

This phishing attack combined a suspicious sender domain, an email address designed to look legitimate, and a sense of urgency in the message. The email closely resembled legitimate communication from OpenAI, but it relied on an obfuscated hyperlink whose actual destination URL varied from one email to another. We’ll break down these elements to help you better understand how attackers are evolving and what to look out for.
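To make the obfuscated-hyperlink trick concrete, here is a minimal Python sketch that uses only the standard library. It compares where each link in an HTML email body actually points against a set of trusted domains. The visible text of a link can say "openai.com" while its `href` leads somewhere else entirely. The function names, the trusted-domain set, and the sample URL are illustrative assumptions. They are not taken from Barracuda's analysis or from any product.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkExtractor(HTMLParser):
    """Collect (href, visible text) pairs for every <a> tag in an HTML body."""

    def __init__(self):
        super().__init__()
        self.links = []    # (href, text) tuples, in document order
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []    # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(url):
    """Lowercase host of a URL; a missing scheme is treated as http."""
    if "://" not in url:
        url = "http://" + url
    return (urlparse(url).hostname or "").lower()


def suspicious_links(html, trusted_domains):
    """Return (visible text, real host) for links that leave trusted domains.

    A host counts as trusted if it equals a trusted domain or is a subdomain
    of one, so help.openai.com passes but openai.com.evil.example does not.
    trusted_domains is an assumed, caller-supplied allowlist.
    """
    parser = LinkExtractor()
    parser.feed(html)
    flagged = []
    for href, text in parser.links:
        if href.startswith("mailto:"):
            continue  # address links are not web destinations
        host = host_of(href)
        if not any(host == d or host.endswith("." + d) for d in trusted_domains):
            flagged.append((text, host))
    return flagged


if __name__ == "__main__":
    # Hypothetical link: the text shows an OpenAI URL, the href goes elsewhere.
    body = ('<p>Your payment failed. <a href="https://billing-update.example.net/r/8f2c">'
            'https://openai.com/account/billing</a></p>')
    print(suspicious_links(body, {"openai.com"}))
    # [('https://openai.com/account/billing', 'billing-update.example.net')]
```

Checking the real host, rather than the URL string, means that a changing path or query string does not help an attacker hide. Any destination outside the allowlist is surfaced, however the link is worded.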

Since the launch of ChatGPT, OpenAI has drawn significant interest from both businesses and cybercriminals. Companies are increasingly concerned about whether their existing cybersecurity measures can adequately defend against threats created with generative AI tools, while attackers are finding new ways to exploit those same tools. From crafting convincing phishing campaigns to deploying advanced credential harvesting and malware delivery methods, cybercriminals are using AI to target end users and capitalize on potential vulnerabilities.

Elements of the phishing attack

When Barracuda’s analysts examined the OpenAI impersonation attack, they found that the volume of emails was significant but the attack itself was surprisingly unsophisticated. It was sent from a single domain to more than 1,000 recipients. The emails did, however, use different hyperlinks in the message body, possibly to evade detection. The following high-level attributes of the email break down its phishing characteristics:

1. Sender’s email address

- The email was sent from info@mta.topmarinelogistics.com, which does not match OpenAI’s official domain (@openai.com). This is a significant red flag.

2. DKIM and SPF records

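- The email passed DKIM and SPF checks. This means the message was signed with a key published by the sending domain (DKIM) and was sent from a server that domain authorizes to send mail on its behalf (SPF). These checks confirm only that the mail really came from the sending domain. They say nothing about whether that domain is trustworthy, and here the domain itself is suspicious.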

3. Content and language

- The language used in the email is typical of phishing attempts, urging immediate action and creating a sense of urgency. Legitimate companies usually do not pressure users in this manner.

4. Contact information

- The email lists a recognizable support address (support@openai.com), which lends the message apparent legitimacy. However, the surrounding context and the sender’s address undermine its credibility.
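The red flags above can be checked mechanically. This minimal sketch uses Python's standard `email` package to flag four things in a message:

- the sender domain is not the impersonated brand;
- SPF or DKIM pass, but only for that outside domain;
- the body uses pressure language;
- the body cites a brand address that the sender cannot actually use.

The urgency phrase list, the `brand_domain` parameter, and the sample headers are illustrative assumptions, including the display name and the `Authentication-Results` values. A plain substring test on `Authentication-Results` is also much cruder than how a production filter would evaluate authentication.

```python
import re
from email import message_from_string
from email.utils import parseaddr

# Illustrative pressure phrases (an assumption), not an exhaustive list.
URGENCY = re.compile(r"\b(urgent|immediately|act now|suspended|within 24 hours)\b", re.I)
# Captures the domain part of any email address found in text.
EMAIL_ADDR = re.compile(r"[\w.+-]+@([\w-]+(?:\.[\w-]+)+)")


def domain_of(header_value):
    """Lowercase domain of the address in a From-style header."""
    _, addr = parseaddr(header_value)
    return addr.rpartition("@")[2].lower()


def belongs_to(domain, brand_domain):
    """True if domain is the brand domain or one of its subdomains."""
    return domain == brand_domain or domain.endswith("." + brand_domain)


def body_text(msg):
    """Concatenate every text/* part of the message."""
    chunks = []
    for part in msg.walk():  # walk() also yields a single-part message itself
        if part.get_content_maintype() == "text":
            payload = part.get_payload(decode=True) or b""
            chunks.append(payload.decode(part.get_content_charset() or "utf-8", "replace"))
    return "\n".join(chunks)


def red_flags(raw_message, brand_domain):
    """Return human-readable red flags for one raw RFC 5322 message."""
    msg = message_from_string(raw_message)
    flags = []
    sender = domain_of(msg.get("From", ""))
    external = not belongs_to(sender, brand_domain)

    # 1. The sender's domain is not the brand it claims to be.
    if external:
        flags.append(f"sender domain {sender} is not {brand_domain}")

    # 2. An SPF/DKIM "pass" only vouches for the sender's own domain.
    auth = msg.get("Authentication-Results", "").lower()
    if external and ("spf=pass" in auth or "dkim=pass" in auth):
        flags.append("SPF/DKIM passed, but for a non-brand domain")

    text = body_text(msg)
    # 3. Pressure language in the body.
    hits = sorted({m.group(0).lower() for m in URGENCY.finditer(text)})
    if hits:
        flags.append("urgency language: " + ", ".join(hits))

    # 4. The body cites a brand address, but the mail came from elsewhere.
    cited = {d.lower() for d in EMAIL_ADDR.findall(text)}
    if external and any(belongs_to(d, brand_domain) for d in cited):
        flags.append("body cites a brand address, but the sender is external")
    return flags


if __name__ == "__main__":
    # Hypothetical message modeled on the attributes listed above.
    sample = "\n".join([
        "From: OpenAI <info@mta.topmarinelogistics.com>",
        "To: user@example.com",
        "Subject: Action required: update your payment details",
        "Authentication-Results: mx.example.com; spf=pass"
        " smtp.mailfrom=mta.topmarinelogistics.com; dkim=pass"
        " header.d=mta.topmarinelogistics.com",
        'Content-Type: text/plain; charset="utf-8"',
        "",
        "Your payment failed. Please update your details immediately.",
        "Questions? Contact support@openai.com.",
    ])
    for flag in red_flags(sample, "openai.com"):
        print("-", flag)
```

No single check is decisive on its own. A passing SPF/DKIM result combined with a non-brand sender, however, is exactly the pattern this campaign showed.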

Impact of GenAI on phishing

Research from Barracuda and leading security analysts such as Forrester shows an increase in email attacks like spam and phishing since ChatGPT’s launch. Generative AI (GenAI) clearly affects both the volume of attacks and the ease with which they are created. For now, however, cybercriminals are still primarily using it to carry out the same tactics and types of attacks as before, such as impersonating a well-known and influential brand.

The 2024 Data Breach Investigations Report by Verizon shows that GenAI was mentioned in fewer than 100 breaches last year. The report states, “We did keep an eye out for any indications of the use of the emerging field of generative artificial intelligence (GenAI) in attacks and the potential effects of those technologies, but nothing materialized in the incident data we collected globally.” It further states that the number of mentions of GenAI terms alongside traditional attack types and vectors such as phishing, malware, vulnerabilities, and ransomware was low.

Similarly, Forrester analysts observed in their 2023 report that while tools like ChatGPT can make phishing emails and websites more convincing and scalable, there’s little to suggest that generative AI has fundamentally changed the nature of attacks. The report states, “GenAI’s ability to create compelling text and images will considerably improve the quality of phishing emails and websites, it can also help fraudsters compose their attacks on a greater scale.”

That said, it’s only a matter of time before advances in GenAI enable new and more sophisticated threats. Attackers are undoubtedly already experimenting with AI, so organizations should prepare now. Staying vigilant about traditional phishing red flags and strengthening basic defenses remain some of the best ways to guard against evolving cyber risks.

How to protect against these attacks

Here are a few strategies to help you get ahead of this evolving threat:

Deploy advanced email security solutions. AI-powered tools that use machine learning can detect and block a wide range of email threat types, including those that leverage AI. These solutions analyze email content, sender behavior, and intent to identify sophisticated phishing attempts, including those that mimic legitimate communication styles.

Ensure continuous security awareness training. Regularly train employees to recognize phishing attacks and the latest tactics used by cybercriminals. Emphasize the importance of scrutinizing unexpected requests, verifying email sources, and reporting suspicious activity. Use simulated phishing attacks to reinforce learning.

Automate your incident response. Post-delivery remediation tools can help minimize the impact of attacks that get through your defenses. Deploy a solution that can respond to email incidents in seconds by identifying and removing every copy of malicious and unwanted mail.
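Commercial remediation tools are proprietary, but the core idea of "find and remove every copy" can be sketched. In the campaign described earlier, the links varied from message to message while the sending domain stayed the same. Matching on the exact URL would therefore miss copies, whereas a fingerprint built from stable fields catches them. This Python sketch makes several assumptions:

- A hypothetical `Message` record and an in-memory `mailboxes` map stand in for a real mail store API.
- The fingerprint (sender domain plus normalized subject) is a simplification; real tools combine many more signals.
- The sample subject line is invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """Hypothetical, simplified record of a delivered message."""
    msg_id: str
    sender_domain: str
    subject: str
    link_hosts: list = field(default_factory=list)  # may differ per copy


def campaign_key(msg):
    """Fingerprint that ignores per-copy link variations (an assumption)."""
    return (msg.sender_domain.lower(), " ".join(msg.subject.lower().split()))


def remediate(mailboxes, reported):
    """Remove every message sharing the reported message's campaign key.

    mailboxes maps a user name to a list of Message objects (a hypothetical
    stand-in for a real mail store) and is modified in place. Returns the
    (user, msg_id) pairs that were removed.
    """
    key = campaign_key(reported)
    removed = []
    for user, inbox in mailboxes.items():
        kept = []
        for msg in inbox:
            if campaign_key(msg) == key:
                removed.append((user, msg.msg_id))
            else:
                kept.append(msg)
        inbox[:] = kept  # replace the inbox contents in place
    return removed


if __name__ == "__main__":
    subject = "Action required: update your payment details"  # hypothetical
    mailboxes = {
        "alice": [Message("1", "mta.topmarinelogistics.com", subject, ["a.example.net"])],
        "bob": [
            Message("2", "mta.topmarinelogistics.com", subject, ["b.example.org"]),
            Message("3", "example.com", "Team lunch on Friday"),
        ],
    }
    print(remediate(mailboxes, mailboxes["alice"][0]))
    # [('alice', '1'), ('bob', '2')]
```

Both phishing copies are removed even though their link hosts differ, and the unrelated message in Bob's inbox is left alone.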